Notes

I’ve never invested much time in understanding how operating systems work. It has never been on my interests list, nor have I considered it useful knowledge for my career. What can I say? We all make bad decisions in our lives.

Over the last two years, I have invested a lot of time in understanding how some things work in Linux. Those are the consequences (or benefits?) of working with a complex and low-level system like a database.

This week, I was working on tracking data that was read from the page cache. While debugging some ClickHouse metrics directly collected from the Kernel, I discovered the /proc/thread-self directory1.

/proc/thread-self

When a thread accesses this magic symbolic link, it resolves to the process’s own /proc/self/task/[tid] directory.

So /proc/thread-self points to the proc folder for the current thread. In those folders, you can find a lot of helpful information. In my case, I was interested in /proc/thread-self/io 2, where you have IO statistics.

I was focused on investigating whether the Kernel reported bytes read from S3 inside rchar. I shared more information in this PR in ClickHouse repository. Despite the PR being closed, the examples I shared there still hold valuable insights and a reproducer that can contribute to the collective understanding of the system.

Footnotes

-

There is also a

/proc/selffor the current process. This is something I didn’t know either. ↩ -

https://docs.kernel.org/filesystems/proc.html#proc-pid-io-display-the-io-accounting-fields ↩

It’s quite common to find folks sharing papers on minor breakthroughs in areas like NLP, Computer Vision, Machine Learning, Deep Learning, and related fields here.

We’ve carefully gathered a collection of papers focused on databases, distributed systems, and data in general. We’ve explored the latest developments in these areas and gained valuable insights from the many articles in our list.

Some of these publications were suggested to us after we made our list public, adding to the richness of our curated collection.

- MapReduce: Simplified Data Processing on Large Clusters

- Spark: Cluster Computing with Working Sets

- Kafka: a Distributed Messaging System for Log Processing

- Dremel: Interactive Analysis of Web-Scale Datasets: A paper describing the technology behind Google BigQuery

- Procella: Unifying serving and analytical data at YouTube

- The Log: What every software engineer should know about real-time data’s unifying abstraction: Not a paper but an article from one of the Kafka creators. He explains the basic data structure, key for many databases, and modern distributed systems.

- Making reliable distributed systems in the presence of software errors

- Conflict-free Replicated Data Types (CRDT)

- Delta State Replicated Data Types

- Time, Clocks and the Ordering of Events in a Distributed System

- Dynamo: Amazon’s Highly Available Key-value Store

- Linearizability: A Correctness Condition for Concurrent Objects

- Space/Time Trade-offs in Hash Coding with Allowable Errors: Not especially interesting reading but had to mention Bloom Filters and this is the original paper. Perhaps the most surprising data structure I discovered working with data.

- Designing Data-Intensive Applications: The Big Ideas Behind Reliable, Scalable, and Maintainable Systems: Not a paper but a book. THE book for anyone interested in databases, data, and distributed systems.

- Naiad: A Timely Dataflow System: Precursor paper of Materialize

- The Snowflake Elastic Data Warehouse

- The Dataflow Model: A Practical Approach to Balancing Correctness, Latency, and Cost in Massive-Scale, Unbounded, Out-of-Order Data Processing

When a query or transaction acquires a lock on a table, the lock remains for the duration of the query or transaction. Other queries or transactions that are waiting to acquire the same lock are blocked.

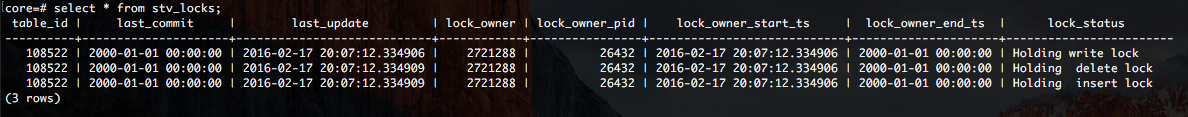

When you take a look to Redshift documentation they recommend you using STV_LOCKS, which results on:

It seems really useful until you have a real database lock. Last month I was trying to solve a lock that was blocking lots of processes. Finally, I found a better way to locate the queries that are causing locks:

Here you have the query itself:

SELECT current_time,

c.relname,

l.database,

l.transaction,

l.pid,

a.usename,

l.mode,

l.granted

FROM pg_locks l

JOIN pg_catalog.pg_class c ON c.oid = l.relation

JOIN pg_catalog.pg_stat_activity a ON a.procpid = l.pid

WHERE l.pid <> pg_backend_pid();